The magnetic north pole, also known as true north, has begun moving south. It had been moving toward the North Pole throughout the last century. It moved out of Canadian waters about 15 years ago, heading toward Russia. This year it passed as close to the North Pole as it is likely to, and began heading south (Das Vedanga, old friend). So this might be a good time to ask “why is it moving?” or better yet, “why does it exist at all?” Sorry to say, the Wikipedia page is little help here; what little it says looks very wrong. So I thought I’d do my thing and write an essay.

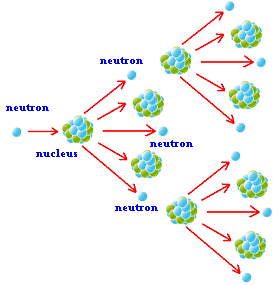

Migration of the magnetic (true) north pole over the last century; it’s at 86°N and just passed the North Pole.

Your first assumption about the cause of the earth’s magnetic field would involve ferromagnetism: the earth’s core is largely iron and nickel, two metals that form permanent magnets. Although the earth’s core is very hot, far above the “Curie Temperature” below which permanent magnets can form, you might imagine that some small degree of magnetizability remains. You’d be sort of right here and sort of wrong; to see why, let’s take a diversion into the Curie Temperature (Pierre Curie in this case) before presenting a better explanation.

The reason there is no magnetism above the Curie temperature is similar to the reason that you can’t have a plague outbreak or an atom bomb if R-naught is less than one. Imagine a magnet inside a pot of iron. The surrounding iron will dissipate some of the field because magnets are dipoles and the iron occupies space. Fixed dipole effects dissipate with a distance relation of r⁻⁴; induced dipoles with a relation of r⁻⁶. The iron surrounding the magnet will also be magnetized to an extent that augments the original, but the degree of magnetization decreases with temperature. Above some critical temperature, the surrounding iron dissipates more than it adds, and the effect is that the original magnetic effect will die out if the original magnet is removed. It’s the same way that plagues die out if enough people are immunized, discussed earlier.
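The die-out argument can be sketched as a simple feedback loop. This is a toy model only, not a calculation of real iron: `gain` is a hypothetical stand-in for how much field the surrounding iron adds back relative to what it dissipates in each round, playing the role that R-naught plays for a plague. A gain below one corresponds to being above the Curie temperature.

```python
def field_after_rounds(initial_field, gain, rounds):
    """Repeatedly multiply a field by `gain` and return the whole history.

    `gain` is a hypothetical ratio (field added back by the surrounding
    iron divided by field dissipated); it is an assumption of this toy
    model, not a measured material property.
    """
    field = initial_field
    history = [field]
    for _ in range(rounds):
        field = gain * field
        history.append(field)
    return history


# gain < 1: the induced field dies out once the original magnet is removed
above_curie = field_after_rounds(1.0, gain=0.8, rounds=20)

# gain > 1: the induced field sustains and grows, as in a permanent magnet
below_curie = field_after_rounds(1.0, gain=1.2, rounds=20)

print(above_curie[-1], below_curie[-1])
```

With a gain of 0.8 the field falls to about one percent of its starting value after 20 rounds; with a gain of 1.2 it grows instead.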

The earth rotates, and the earth’s surface is negatively charged. There is thus some room for internal currents.

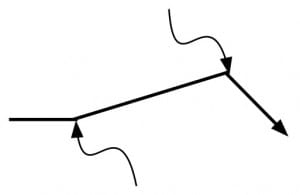

It seems that the earth’s magnetic field is electromagnetic; that is, it’s caused by a current of some sort. According to Wikipedia, the magnetic field of the earth is caused by electric currents in the molten iron and nickel of the earth’s core. While there is likely a current within the core, I suspect that its effect is small. Wikipedia provides no mechanism for this current, but the obvious one is based on the negative charge of the earth’s surface. If the charge on the surface is non-uniform, it is possible that the outer part of the earth’s core could become positively charged, rather the way a capacitor charges. You’d expect some internal circulation of the liquid metal of the core, as shown above (it’s similar to the induced flow of tornadoes), and that flow could induce a magnetic field. But internal circulation of the metallic core does not seem to be a likely mechanism of the earth’s field. One problem: the magnitude of the field created this way would be smaller than the one caused by rotation of the negatively charged surface of the earth, and it would be in the opposite direction. Besides, it is not clear that the interior of the planet has any charge at all: the normal expectation is for charge to distribute fairly uniformly on a spherical surface.

The TV series NOVA presents a yet more unlikely mechanism: that motion of the liquid metal interior against the magnetic field of the earth increases the magnetic field. The motion of a metal in a magnetic field does indeed produce a field but, sorry to say, it’s in the opposing direction, something that should be obvious from conservation of energy.

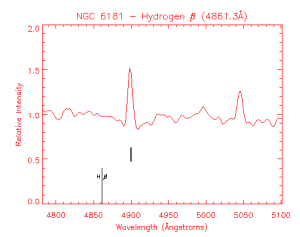

The true cause of the earth’s magnetic field, in my opinion, is the negative charge of the earth and its rotation. There is a near-equal and opposite charge on the atmosphere, and its rotation should produce a near-opposite magnetic field, but there appears to be enough difference to provide for the field we see. The charge on the planet might be due to the solar wind or to ionization by cosmic rays. I notice that the average speed of parts of the atmosphere, the jet stream in particular, exceeds that of the surface, but it seems clear to me that the magnetic field is not due to rotation of the jet stream because, if that were the cause, magnetic north would be magnetic south. (When positive charges rotate from west to east, as in the jet stream, the magnetic field created has a north magnetic pole at the North Pole. But in fact the North magnetic pole is the south pole of a magnet, which is why the N-side of a compass is attracted to it, so the cause must be negative charge rotation.) Or so it seems to me. Supporting this view, I note that the magnetic pole sometimes flips, north for south, but this only follows a slow decline in magnetic strength, and it never points toward a spot on the equator. I’m going to speculate that the flip occurs when the net charge reverses, though it could also come when the speed or charge of the jet stream picks up. I note that the magnetic field of the earth varies through the 24-hour day, below.
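The sign argument above can be checked with a small sketch. It assumes the standard textbook result that a thin, uniformly charged spherical shell of charge Q and radius R, spinning at angular velocity ω, has a magnetic dipole moment of Q R² ω / 3 along the spin axis. That formula, the radius value, and the function names are assumptions brought in for illustration; the essay itself does not use them. The earth turns west to east, so its spin axis points toward geographic north.

```python
import math

EARTH_RADIUS_M = 6.371e6                       # mean radius, assumed value
OMEGA_RAD_PER_S = 2 * math.pi / (24 * 3600)    # one turn per 24-hour day


def shell_dipole_moment(charge_coulombs,
                        radius_m=EARTH_RADIUS_M,
                        omega=OMEGA_RAD_PER_S):
    """Dipole moment (A·m²) of a spinning, uniformly charged thin shell.

    Positive means the moment points along the spin axis, toward
    geographic north; negative means it points toward geographic south.
    """
    return charge_coulombs * radius_m ** 2 * omega / 3.0


def magnet_pole_at_geographic_north(charge_coulombs):
    """Return 'N' or 'S': which pole of the equivalent bar magnet sits north.

    A dipole moment points from a magnet's south pole to its north pole,
    so a moment pointing toward geographic north puts the N pole there.
    """
    return "N" if shell_dipole_moment(charge_coulombs) > 0 else "S"


print(magnet_pole_at_geographic_north(+96500.0))  # positive charge
print(magnet_pole_at_geographic_north(-96500.0))  # negative charge
```

Under these assumptions a positively charged shell puts a magnet's N pole at the geographic north, and a negatively charged one puts a magnet's S pole there, which is the direction a compass needle's N-side is drawn toward.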

Although magnetic north is now heading south, I don’t expect it to flip any time soon. The magnetic strength has been decreasing by about 6.3% per century. If it continues at that rate (unlikely), it will be some 1600 years to the flip, and I expect that the decrease will probably slow. It would probably take a massive change in climate to change the charge or speed of the jet stream enough to reverse the magnetic poles. Interestingly though, the period of magnetic strength variation is 41,000 years, the same period as the changes in the planet’s tilt. And the 41,000-year cycle of changes in the planet’s tilt, as I’ve described, is related to ice ages.
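The 1600-year figure is a straight-line extrapolation, which can be sketched as follows. The second function is an added assumption, not something stated above: it shows one way the decrease could slow, by losing 6.3% of the remaining strength each century rather than 6.3% of today's strength.

```python
DECLINE_PER_CENTURY = 0.063  # 6.3% per century, the figure quoted in the text


def years_to_zero_linear(decline_per_century=DECLINE_PER_CENTURY):
    """Years until the field reaches zero if it keeps losing the same
    fixed fraction of today's strength every century."""
    return 100.0 / decline_per_century


def fraction_left_compounding(years, decline_per_century=DECLINE_PER_CENTURY):
    """Fraction of today's strength left after `years` if each century
    removes 6.3% of whatever strength remains (an assumed alternative)."""
    return (1.0 - decline_per_century) ** (years / 100.0)


print(round(years_to_zero_linear()))        # about 1587, i.e. some 1600 years
print(fraction_left_compounding(1600.0))    # roughly a third still remains
```

The compounding version never reaches zero at all, which is one reason the straight-line estimate is best read as a lower bound on the time to a flip.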

Now for a little math. Assume there is 1 mol of excess electrons on a large sphere the size of the earth. That’s 96500 coulombs of electrons, and the effective current caused by the earth’s rotation is i = 96500/(24 × 3600) = 1.1 amps. The magnetic field strength is H = i N µ/L, where H is the magnetizing field in oersteds, N is the number of turns (in this case 1), and µ is the magnetizability. The magnetizability of air is 0.0125 meter-oersteds per ampere-turn, and that of a system with an iron core is about 200 times more, 2.5 meter-tesla/ampere-turn. L is a characteristic length of the electromagnet, and I’ll say that’s 10,000 km or 10⁷ meters. As a net result, I calculate a magnetic strength of 2.75×10⁻⁷ tesla, or 0.00275 gauss. The magnetic field of the earth is about 0.3 gauss, suggesting that about 100 mols of excess charge are involved in the earth’s field, assuming that my explanation and my math are correct.
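The arithmetic above can be reproduced in a few lines. This sketch takes every figure exactly as quoted in the text, including the 2.5 meter-tesla per ampere-turn magnetizability and the 10⁷ meter length, and does not check those values independently. The function and constant names are made up for illustration.

```python
FARADAY_C_PER_MOL = 96500.0      # coulombs per mol of electrons, as in the text
SECONDS_PER_DAY = 24 * 3600      # one rotation of the earth


def rotation_current(mols_of_charge):
    """Effective current (amps) from charge carried around once per day."""
    return mols_of_charge * FARADAY_C_PER_MOL / SECONDS_PER_DAY


def field_strength(current_amps, turns=1, mu=2.5, length_m=1.0e7):
    """H = i N mu / L, using the text's mu (2.5) and L (1e7 m) as defaults.

    The result is in tesla if mu is in meter-tesla per ampere-turn,
    which is the unit the text assigns to the 2.5 figure.
    """
    return current_amps * turns * mu / length_m


i = rotation_current(1.0)              # about 1.12 A for 1 mol
field_tesla = field_strength(i)        # about 2.8e-7
field_gauss = field_tesla * 1.0e4      # 1 tesla = 10^4 gauss
mols_needed = 0.3 / field_gauss        # mols to reach the earth's 0.3 gauss

print(i, field_tesla, field_gauss, mols_needed)
```

The text rounds the current to 1.1 amps, giving 2.75×10⁻⁷; carrying more digits gives about 2.8×10⁻⁷ and a little over 100 mols, the same order of magnitude.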

At this point, I should mention that Venus has about 1/100 the magnetic field of the earth despite having a molten metallic core like the earth. Its rotation time is 243 days. Jupiter, Saturn and Uranus have greater magnetic fields despite having no metallic cores, certainly no molten metallic cores (some theorize a core of solid, metallic hydrogen). All of these rotate faster than the earth.

Robert E. Buxbaum, February 3, 2019. I have two pet peeves here. One is that none of the popular science articles on the earth’s magnetic field bother to show math to back their claims. This is a growing problem in the literature; it robs science of science, and makes it into a political-correctness exercise where you are made to appreciate the political fashion of the writer. The other peeve, related to the above, concerns the units game; it’s thoroughly confusing, and politically ego-driven. The gauss is the cgs unit of magnetic flux density; this unit is called G in Europe but B in the US or England. In the US we like to use the tesla, T, as the SI (mks) unit. One tesla equals 10⁴ gauss. The oersted, H, is the unit of magnetizing field. The unit is H and not O because the English call this unit the henry because Henry did important work in magnetism. One ampere-turn per meter is equal to 4π × 10⁻³ oersted, a number I approximated to 0.0125 above. But the above only refers to flux density; what about flux itself? The unit for magnetic flux is the weber, Wb, in SI, or the maxwell, Mx, in cgs. Of course, magnetic flux is nothing more than the integral of flux density over an area, so why not describe flux in ampere-meters or gauss-acres? It’s because Ampere was French and Gauss was German, I think.
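For anyone who wants to keep the units straight, here is a minimal conversion sketch. It uses only the standard factors: 10⁴ gauss per tesla, 4π × 10⁻³ oersted per ampere-turn per meter, and 10⁸ maxwell per weber. The last factor is not stated above and is added here as a known constant; the function names are hypothetical.

```python
import math

GAUSS_PER_TESLA = 1.0e4
OERSTED_PER_AMPERE_TURN_PER_METER = 4 * math.pi * 1.0e-3   # about 0.01257
MAXWELL_PER_WEBER = 1.0e8


def tesla_to_gauss(tesla):
    """Flux density: SI tesla to cgs gauss."""
    return tesla * GAUSS_PER_TESLA


def gauss_to_tesla(gauss):
    """Flux density: cgs gauss to SI tesla."""
    return gauss / GAUSS_PER_TESLA


def ampere_turns_per_meter_to_oersted(a_per_m):
    """Magnetizing field: SI ampere-turns per meter to cgs oersted."""
    return a_per_m * OERSTED_PER_AMPERE_TURN_PER_METER


def weber_to_maxwell(weber):
    """Magnetic flux: SI weber to cgs maxwell."""
    return weber * MAXWELL_PER_WEBER


print(tesla_to_gauss(2.75e-7))                  # the 0.00275 gauss figure
print(gauss_to_tesla(0.3))                      # the earth's field in tesla
print(ampere_turns_per_meter_to_oersted(1.0))   # close to the rounded 0.0125
```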